Unveiling the Power of Audio-Visual Early Fusion Transformers with Dense Interactions through Masked Modeling

Abstract

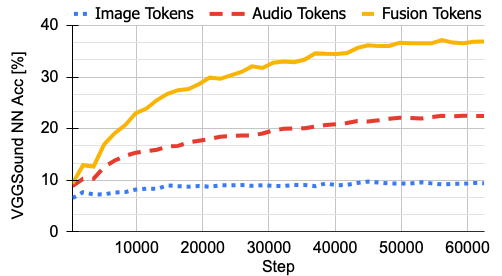

Humans possess a remarkable ability to integrate auditory and visual information, enabling a deeper understanding of the surrounding environment. This early fusion of audio and visual cues, demonstrated through cognitive psychology and neuroscience research, offers promising potential for developing multimodal perception models. However, training early fusion architectures poses significant challenges, as the increased model expressivity requires robust learning frameworks to harness their enhanced capabilities. In this paper, we address this challenge by leveraging the masked reconstruction framework, previously successful in unimodal settings, to train audio-visual encoders with early fusion. Additionally, we propose an attention-based fusion module that models interactions between local audio and visual representations, enhancing the model's ability to capture fine-grained interactions. While effective, this procedure can become computationally intractable as the number of local representations increases. To address this computational complexity, we propose an alternative procedure that factorizes the local representations before representing audio-visual interactions. Extensive evaluations on a variety of datasets demonstrate the superiority of our approach in audio-event classification, visual sound localization, sound separation, and audio-visual segmentation. These contributions enable the efficient training of deeply integrated audio-visual models and significantly advance the usefulness of early fusion architectures.
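To make the cost argument in the abstract concrete, here is a minimal sketch of dense attention between local audio and visual tokens, next to a cheaper variant that summarizes the tokens first. It is not the paper's implementation: the function names (`cross_attend`, `dense_fusion`, `pool`, `pooled_fusion`), the token counts, and the absence of learned projections are all assumptions made for illustration, and average pooling merely stands in for the factorization step, which the abstract does not specify.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attend(queries, keys_values):
    """Single-head scaled dot-product attention without learned projections.

    Each query token returns a weighted average of the keys_values tokens.
    """
    dim = len(queries[0])
    out = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim)
                  for k in keys_values]
        weights = softmax(scores)
        out.append([sum(w * k[j] for w, k in zip(weights, keys_values))
                    for j in range(dim)])
    return out


def add(xs, ys):
    """Element-wise sum of two equally shaped token lists (residual connection)."""
    return [[a + b for a, b in zip(x, y)] for x, y in zip(xs, ys)]


def dense_fusion(audio, visual):
    """Every audio token attends to every visual token, and vice versa."""
    return (add(audio, cross_attend(audio, visual)),
            add(visual, cross_attend(visual, audio)))


def pool(tokens, n_groups):
    """Average contiguous chunks of tokens into at most n_groups summaries.

    Assumption: a hypothetical stand-in for the paper's factorization.
    """
    size = math.ceil(len(tokens) / n_groups)
    chunks = [tokens[i:i + size] for i in range(0, len(tokens), size)]
    return [[sum(col) / len(chunk) for col in zip(*chunk)] for chunk in chunks]


def pooled_fusion(audio, visual, n_groups):
    """Each token attends only to the other modality's pooled summaries."""
    return (add(audio, cross_attend(audio, pool(visual, n_groups))),
            add(visual, cross_attend(visual, pool(audio, n_groups))))


random.seed(0)
dim = 8
audio = [[random.gauss(0, 1) for _ in range(dim)] for _ in range(16)]
visual = [[random.gauss(0, 1) for _ in range(dim)] for _ in range(49)]

dense_audio, dense_visual = dense_fusion(audio, visual)
pooled_audio, pooled_visual = pooled_fusion(audio, visual, n_groups=4)

# Number of query-key scores computed by each variant.
dense_pairs = 2 * len(audio) * len(visual)
pooled_pairs = (len(audio) * len(pool(visual, 4))
                + len(visual) * len(pool(audio, 4)))
print(dense_pairs, pooled_pairs)
```

With 16 audio tokens and 49 visual tokens, the dense variant scores 1568 query-key pairs while the pooled variant scores 260. The dense count grows with the product of the two token counts, which is the scaling problem the abstract refers to.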

Published at: Conf. on Computer Vision and Pattern Recognition (CVPR), Seattle, 2024.

Bibtex

@InProceedings{mo2024_efav,
  title={Unveiling the Power of Audio-Visual Early Fusion Transformers with Dense Interactions through Masked Modeling},
  author={Shentong Mo and Pedro Morgado},
  booktitle={IEEE/CVF Conf. on Computer Vision and Pattern Recognition (CVPR)},
  year={2024}
}